Introduction

Theorem proving is hard for ML because there are not enough human-written proofs in formal languages. Geometry is especially difficult because translating human geometric proofs into machine-verifiable form is very hard.

Current geometry provers rely mainly on symbolic rules and hand-crafted heuristics, not learning. The authors solve the data shortage by generating 100 million synthetic theorems and proofs using symbolic engines.

They introduce dependency difference, which helps create nearly 10 million steps of auxiliary constructions. Auxiliary construction means adding new helper points; this creates infinite branching and is the hardest part of geometry proofs. The synthetic data lets the model learn auxiliary constructions without human demonstrations.

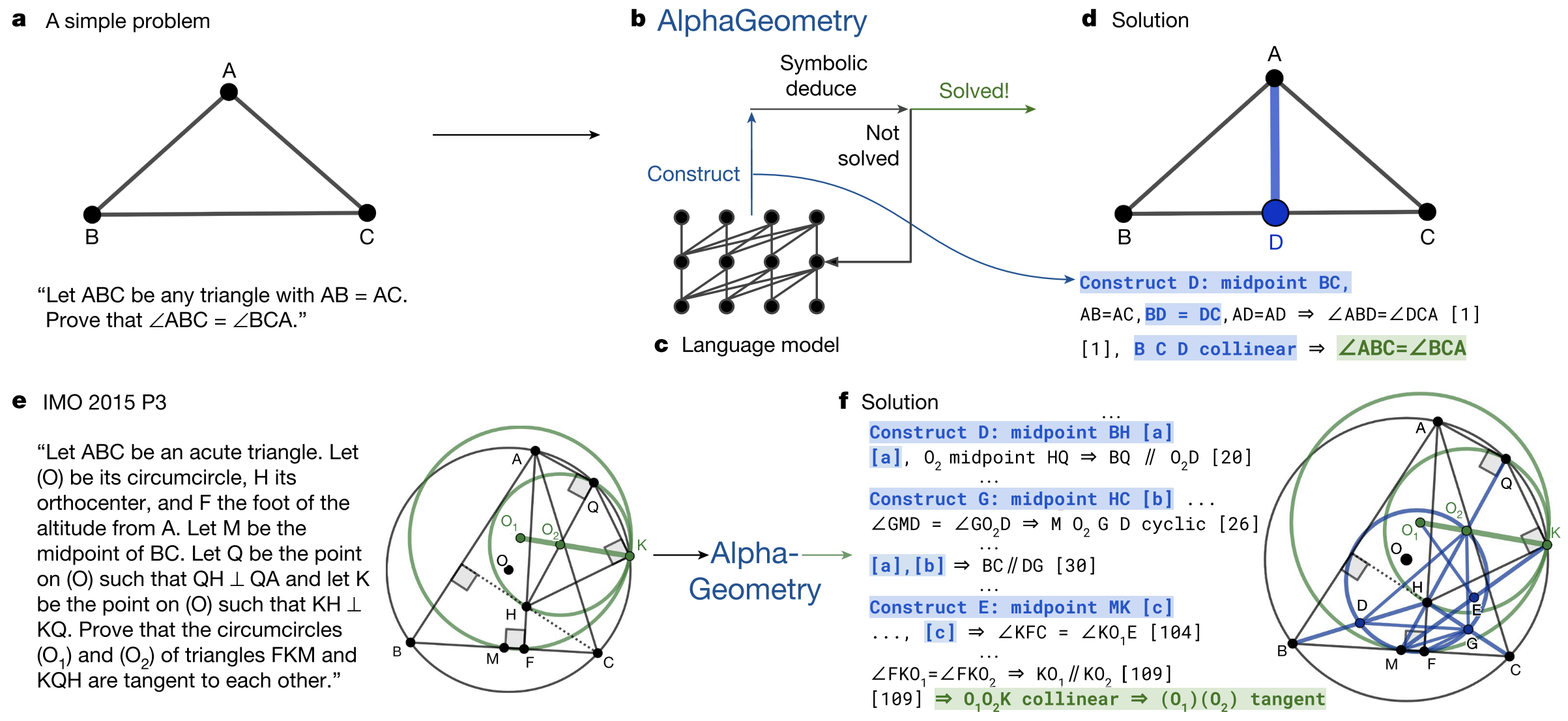

They train a language model on all synthetic proofs and fine-tune it to focus on auxiliary constructions. The symbolic engines handle all normal deduction steps, while the neural model generates new proof terms.

The resulting system, AlphaGeometry, proves geometry problems and produces human-readable proofs, performing close to an IMO gold medalist level.

Method

Generate synthetic geometry data

- Random simple premises → symbolic engine → 100M synthetic theorems + proofs.

- Symbolic Engine is built manually:

- Deductive Database (DD) → Databse of deductive rules (symbolic rules)

- Algebraic Reasoning (AR) → Gaussian method (algebraic solver)

Extract auxiliary constructions:

- Use dependency difference to find extra helper points (~10M).

Compare the conclusion with its minimal required premises. Any extra points used along the way are treated as auxiliary constructions (~10M examples). These teach the model how to add helpful new points.

Train a Transformer model on serialized proofs.

Feed it the serialized form of each synthetic theorem:

The model learns how proofs and helper constructions look.

Neuro-symbolic proof search:

- The symbolic engine tries to prove the input problem using known rules.

- If it cannot finish, the language model proposes a new helper point.

- Add the point → try symbolic engine again → repeat until solved.

Novelty

Generate all training data synthetically

→ No human proofs needed.

Introduce “dependency difference”

→ Automatically learn auxiliary constructions (helper points).

Neuro-symbolic loop

- Symbolic engine deduces

- Neural model adds new constructions when stuck.

These three ideas together let AlphaGeometry reach near IMO gold-medalist level in geometry.